Twitter image encoding challenge [closed]

If a picture's worth 1000 words, how much of a picture can you fit in 140 characters?

Note: That's it folks! Bounty deadline is here, and after some tough deliberation, I have decided that Boojum's entry just barely edged out Sam Hocevar's. I will post more detailed notes once I've had a chance to write them up. Of course, everyone should feel free to continue to submit solutions and improve solutions for people to vote on. Thank you to everyone who submitted an entry; I enjoyed all of them. This has been a lot of fun for me to run, and I hope it's been fun for both the entrants and the spectators.

I came across this interesting post about trying to compress images into a Twitter comment, and lots of people in that thread (and a thread on Reddit) had suggestions about different ways you could do it. So, I figure it would make a good coding challenge; let people put their money where their mouth is, and show how their ideas about encoding can lead to more detail in the limited space that you have available.

I challenge you to come up with a general purpose system for encoding images into 140 character Twitter messages, and decoding them into an image again. You can use Unicode characters, so you get more than 8 bits per character. Even allowing for Unicode characters, however, you will need to compress images into a very small amount of space; this will certainly be a lossy compression, and so there will have to be subjective judgements about how good each result looks.

Here is the result that the original author, Quasimondo, got from his encoding (image is licensed under a Creative Commons Attribution-Noncommercial license):

Can you do better?

Rules

- Your program must have two modes: encoding and decoding.

- When encoding:

- Your program must take as input a graphic in any reasonable raster graphic format of your choice. We'll say that any raster format supported by ImageMagick counts as reasonable.

- Your program must output a message which can be represented in 140 or fewer Unicode code points; 140 code points in the range U+0000–U+10FFFF, excluding non-characters (U+FFFE, U+FFFF, U+nFFFE, U+nFFFF where n is 1–10 hexadecimal, and the range U+FDD0–U+FDEF) and surrogate code points (U+D800–U+DFFF). It may be output in any reasonable encoding of your choice; any encoding supported by GNU iconv will be considered reasonable, and your platform native encoding or locale encoding would likely be a good choice. See Unicode notes below for more details.

- When decoding:

- Your program should take as input the output of your encoding mode.

- Your program must output an image in any reasonable format of your choice, as defined above, though for output vector formats are OK as well.

- The image output should be an approximation of the input image; the closer you can get to the input image, the better.

- The decoding process may have no access to any other output of the encoding process other than the output specified above; that is, you can't upload the image somewhere and output the URL for the decoding process to download, or anything silly like that.

- For the sake of consistency in user interface, your program must behave as follows:

- Your program must be a script that can be set to executable on a platform with the appropriate interpreter, or a program that can be compiled into an executable.

- Your program must take as its first argument either encode or decode to set the mode.

- Your program must take input in one or more of the following ways (if you implement the one that takes file names, you may also read and write from stdin and stdout if file names are missing):

- Take input from standard in and produce output on standard out.

my-program encode <input.png >output.txt
my-program decode <output.txt >output.png

- Take input from a file named in the second argument, and produce output in the file named in the third.

my-program encode input.png output.txt
my-program decode output.txt output.png

- For your solution, please post:

- Your code, in full, and/or a link to it hosted elsewhere (if it's very long, or requires many files to compile, or something).

- An explanation of how it works, if it's not immediately obvious from the code or if the code is long and people will be interested in a summary.

- An example image, with the original image, the text it compresses down to, and the decoded image.

- If you are building on an idea that someone else had, please attribute them. It's OK to try to do a refinement of someone else's idea, but you must attribute them.
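The command-line contract in the rules above can be sketched as a small dispatcher. This is only a minimal illustration, not a reference implementation: the names `run`, `encode_fn`, and `decode_fn` are hypothetical, UTF-8 is assumed as the output encoding (the rules allow any encoding GNU iconv supports), and the actual image codec is left to the caller.

```python
import sys

MAX_CODE_POINTS = 140  # limit taken from the rules above


def run(argv, encode_fn, decode_fn, stdin=None, stdout=None):
    """Dispatch `my-program encode|decode [input [output]]`.

    encode_fn(bytes) -> str and decode_fn(str) -> bytes are hypothetical
    callables supplied by the entrant; this function only handles I/O.
    """
    if len(argv) < 2 or argv[1] not in ("encode", "decode"):
        raise SystemExit("usage: my-program encode|decode [input [output]]")
    mode = argv[1]
    if stdin is None:
        stdin = sys.stdin.buffer
    if stdout is None:
        stdout = sys.stdout.buffer

    # Read from the file named in the second argument, else from stdin.
    if len(argv) > 2:
        with open(argv[2], "rb") as handle:
            data = handle.read()
    else:
        data = stdin.read()

    if mode == "encode":
        message = encode_fn(data)
        # len() of a Python 3 str counts code points, not bytes.
        if len(message) > MAX_CODE_POINTS:
            raise ValueError("message exceeds 140 code points")
        result = message.encode("utf-8")
    else:
        result = decode_fn(data.decode("utf-8"))

    # Write to the file named in the third argument, else to stdout.
    if len(argv) > 3:
        with open(argv[3], "wb") as handle:
            handle.write(result)
    else:
        stdout.write(result)
```

A script would typically end with something like `run(sys.argv, my_encode, my_decode)`, where the two functions are the entrant's own.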

Guidelines

These are basically rules that may be broken, suggestions, or scoring criteria:

- Aesthetics are important. I'll be judging, and suggest that other people judge, based on:

- How good the output image looks, and how much it looks like the original.

- How nice the text looks. Completely random gobbledygook is OK if you have a really clever compression scheme, but I also want to see answers that turn images into multi-lingual poems, or something clever like that. Note that the author of the original solution decided to use only Chinese characters, since it looked nicer that way.

- Interesting code and clever algorithms are always good. I like short, to the point, and clear code, but really clever complicated algorithms are OK too as long as they produce good results.

- Speed is also important, though not as important as how good a job compressing the image you do. I'd rather have a program that can convert an image in a tenth of a second than something that will be running genetic algorithms for days on end.

- I will prefer shorter solutions to longer ones, as long as they are reasonably comparable in quality; conciseness is a virtue.

- Your program should be implemented in a language that has a freely-available implementation on Mac OS X, Linux, or Windows. I'd like to be able to run the programs, but if you have a great solution that only runs under MATLAB or something, that's fine.

- Your program should be as general as possible; it should work for as many different images as possible, though some may produce better results than others. In particular:

- Having a few images built into the program that it matches and writes a reference to, and then produces the matching image upon decoding, is fairly lame and will only cover a few images.

- A program that can take images of simple, flat, geometric shapes and decompose them into some vector primitive is pretty nifty, but if it fails on images beyond a certain complexity it is probably insufficiently general.

- A program that can only take images of a particular fixed aspect ratio but does a good job with them would also be OK, but not ideal.

- You may find that a black and white image can get more information into a smaller space than a color image. On the other hand, that may limit the types of image it's applicable to; faces come out fine in black and white, but abstract designs may not fare so well.

- It is perfectly fine if the output image is smaller than the input, while being roughly the same proportion. It's OK if you have to scale the image up to compare it to the original; what's important is how it looks.

- Your program should produce output that could actually go through Twitter and come out unscathed. This is only a guideline rather than a rule, since I couldn't find any documentation on the precise set of characters supported, but you should probably avoid control characters, funky invisible combining characters, private use characters, and the like.

Scoring rubric

As a general guide to how I will be ranking solutions when choosing my accepted solution, let's say that I'll probably be evaluating solutions on a 25 point scale (this is very rough, and I won't be scoring anything directly, just using this as a basic guideline):

- 15 points for how well the encoding scheme reproduces a wide range of input images. This is a subjective, aesthetic judgement.

- 0 means that it doesn't work at all, it gives the same image back every time, or something

- 5 means that it can encode a few images, though the decoded version looks ugly and it may not work at all on more complicated images

- 10 means that it works on a wide range of images, and produces pleasant looking images which may occasionally be distinguishable

- 15 means that it produces perfect replicas of some images, and even for larger and more complex images, gives something that is recognizable. Or, perhaps it does not make images that are quite recognizable, but produces beautiful images that are clearly derived from the original.

- 3 points for clever use of the Unicode character set

- 0 points for simply using the entire set of allowed characters

- 1 point for using a limited set of characters that are safe for transfer over Twitter or in a wider variety of situations

- 2 points for using a thematic subset of characters, such as only Han ideographs or only right-to-left characters

- 3 points for doing something really neat, like generating readable text or using characters that look like the image in question

- 3 points for clever algorithmic approaches and code style

- 0 points for something that is 1000 lines of code only to scale the image down, treat it as 1 bit per pixel, and base64 encode that

- 1 point for something that uses a standard encoding technique and is well written and brief

- 2 points for something that introduces a relatively novel encoding technique, or that is surprisingly short and clean

- 3 points for a one liner that actually produces good results, or something that breaks new ground in graphics encoding (if this seems like a low number of points for breaking new ground, remember that a result this good will likely have a high score for aesthetics as well)

- 2 points for speed. All else being equal, faster is better, but the above criteria are all more important than speed

- 1 point for running on free (open source) software, because I prefer free software (note that C# will still be eligible for this point as long as it runs on Mono, likewise MATLAB code would be eligible if it runs on GNU Octave)

- 1 point for actually following all of the rules. These rules have gotten a bit big and complicated, so I'll probably accept otherwise good answers that get one small detail wrong, but I will give an extra point to any solution that does actually follow all of the rules

Reference images

Some folks have asked for some reference images. Here are a few reference images that you can try; smaller versions are embedded here, they all link to larger versions of the image if you need those:

Prize

I am offering a 500 rep bounty (plus the 50 that StackOverflow kicks in) for the solution that I like the best, based on the above criteria. Of course, I encourage everyone else to vote on their favorite solutions here as well.

Note on deadline

This contest will run until the bounty runs out, about 6 PM on Saturday, May 30. I can't say the precise time it will end; it may be anywhere from 5 to 7 PM. I will guarantee that I'll look at all entries submitted by 2 PM, and I will do my best to look at all entries submitted by 4 PM; if solutions are submitted after that, I may not have a chance to give them a fair look before I have to make my decision. Also, the earlier you submit, the more chance you will have for voting to be able to help me pick the best solution, so try and submit earlier rather than right at the deadline.

Unicode notes

There has also been some confusion on exactly what Unicode characters are allowed. The range of possible Unicode code points is U+0000 to U+10FFFF. There are some code points which are never valid to use as Unicode characters in any open interchange of data; these are the noncharacters and the surrogate code points. Noncharacters are defined in the Unicode Standard 5.1.0 section 16.7 as the values U+FFFE, U+FFFF, U+nFFFE, U+nFFFF where n is 1–10 hexadecimal, and the range U+FDD0–U+FDEF. These values are intended to be used for application-specific internal usage, and conforming applications may strip these characters out of text processed by them. Surrogate code points, defined in the Unicode Standard 5.1.0 section 3.8 as U+D800–U+DFFF, are used for encoding characters beyond the Basic Multilingual Plane in UTF-16; thus, it is impossible to represent these code points directly in the UTF-16 encoding, and it is invalid to encode them in any other encoding. Thus, for the purpose of this contest, I will allow any program which encodes images into a sequence of no more than 140 Unicode code points from the range U+0000–U+10FFFF, excluding all noncharacters and surrogate code points as defined above.
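For anyone who wants to check their output against this definition, here is a minimal sketch of the rule as a predicate. The function names are my own and not part of any library; the ranges are exactly the ones listed in the paragraph above.

```python
def is_allowed(cp: int) -> bool:
    """True if cp is a code point permitted by the contest rules."""
    if not 0 <= cp <= 0x10FFFF:
        return False
    if 0xD800 <= cp <= 0xDFFF:      # surrogate code points
        return False
    if 0xFDD0 <= cp <= 0xFDEF:      # noncharacter range
        return False
    if cp & 0xFFFE == 0xFFFE:       # U+FFFE, U+FFFF and U+nFFFE, U+nFFFF
        return False
    return True


def is_valid_message(text: str) -> bool:
    """True if text has at most 140 code points, all of them allowed."""
    return len(text) <= 140 and all(is_allowed(ord(ch)) for ch in text)


def count_allowed() -> int:
    """Number of code points that pass is_allowed()."""
    return sum(1 for cp in range(0x110000) if is_allowed(cp))
```

Counting the allowed set gives 1,111,998 code points, so a message using the whole range carries a little over 20 bits per character. Restricting output to assigned or Twitter-safe characters lowers that figure.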

I will prefer solutions that use only assigned characters, and even better ones that use clever subsets of assigned characters or do something interesting with the character set they use. For a list of assigned characters, see the Unicode Character Database; note that some characters are listed directly, while some are listed only as the start and end of a range. Also note that surrogate code points are listed in the database, but forbidden as mentioned above. If you would like to take advantage of certain properties of characters for making the text you output more interesting, there are a variety of databases of character information available, such as a list of named code blocks and various character properties.

Since Twitter does not specify the exact character set they support, I will be lenient about solutions which do not actually work with Twitter because certain characters count extra or certain characters are stripped. It is preferred but not required that all encoded outputs should be able to be transferred unharmed via Twitter or another microblogging service such as identi.ca. I have seen some documentation stating that Twitter entity-encodes <, >, and &, and thus counts those as 4, 4, and 5 characters respectively, but I have not tested that out myself, and their JavaScript character counter doesn't seem to count them that way.

Tips & Links

- The definition of valid Unicode characters in the rules is a bit complicated. Choosing a single block of characters, such as CJK Unified Ideographs (U+4E00–U+9FCF) may be easier.

- You may use existing image libraries, like ImageMagick or Python Imaging Library, for your image manipulation.

- If you need some help understanding the Unicode character set and its various encodings, see this quick guide or this detailed FAQ on UTF-8 in Linux and Unix.

- The earlier you get your solution in, the more time I (and other people voting) will have to look at it. You can edit your solution if you improve it; I'll base my bounty on the most recent version when I take my last look through the solutions.

- If you want an easy image format to parse and write (and don't want to just use an existing format), I'd suggest using the PPM format. It's a text based format that's very easy to work with, and you can use ImageMagick to convert to and from it.
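To show how little code the text-based PPM variant needs, here is a minimal sketch of a writer and reader for plain PPM (magic number P3). The function names and the flat list-of-tuples pixel layout are my own choices for illustration. Note that many tools write the binary P6 variant by default, which this sketch does not handle.

```python
def write_ppm(width, height, pixels, maxval=255):
    """Serialize a flat, row-major list of (r, g, b) tuples as plain PPM (P3)."""
    if len(pixels) != width * height:
        raise ValueError("pixel count does not match dimensions")
    lines = ["P3", f"{width} {height}", str(maxval)]
    for y in range(height):
        row = pixels[y * width:(y + 1) * width]
        lines.append(" ".join(f"{r} {g} {b}" for r, g, b in row))
    return "\n".join(lines) + "\n"


def read_ppm(text):
    """Parse plain PPM (P3) text into (width, height, maxval, pixels)."""
    tokens = []
    for line in text.splitlines():
        # Everything after '#' on a line is a comment.
        tokens.extend(line.split("#", 1)[0].split())
    if not tokens or tokens[0] != "P3":
        raise ValueError("not a plain PPM (P3) file")
    width, height, maxval = (int(t) for t in tokens[1:4])
    values = [int(t) for t in tokens[4:4 + 3 * width * height]]
    if len(values) != 3 * width * height:
        raise ValueError("truncated pixel data")
    pixels = [tuple(values[i:i + 3]) for i in range(0, len(values), 3)]
    return width, height, maxval, pixels
```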

image files and python source (version 1 and 2)

Version 1

Here is my first attempt. I will update as I go.

I have got the SO logo down to 300 characters, almost lossless. My technique uses conversion to SVG vector art, so it works best on line art. It is actually an SVG compressor; it still requires the original art to go through a vectorisation stage.

For my first attempt I used an online service for the PNG trace; however, there are MANY free and non-free tools that can handle this part, including potrace (open-source).

Here are the results

Original SO Logo http://www.warriorhut.org/graphics/svg_to_unicode/so-logo.png Original

Decoded SO Logo http://www.warriorhut.org/graphics/svg_to_unicode/so-logo-decoded.png After encoding and decoding

Characters: 300

Time: Not measured but practically instant (not including vectorisation/rasterisation steps)

The next stage will be to embed 4 symbols (SVG path points and commands) per Unicode character. At the moment my Python build does not have wide character (UCS4) support, which limits my resolution per character. I've also limited the maximum range to the lower end of the Unicode reserved range, 0xD800; however, once I build a list of disallowed characters and a filter to avoid them, I can theoretically push the required number of characters as low as 70-100 for the logo above.

A limitation of this method at present is that the output size is not fixed. It depends on the number of vector nodes/points after vectorisation. Automating this limit will require either pixelating the image (which removes the main benefit of vectors) or repeatedly running the paths through a simplification stage until the desired node count is reached (which I'm currently doing manually in Inkscape).

Version 2

UPDATE: v2 is now qualified to compete. Changes:

- Command-line control input/output and debugging

- Uses XML parser (lxml) to handle SVG instead of regex

- Packs 2 path segments per unicode symbol

- Documentation and cleanup

- Support style="fill:color" and fill="color"

- Document width/height packed into single character

- Path color packed into single character

- Color compression is achieved by throwing away 4 bits of color data per color then packing it into a character via hex conversion.
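The color packing in the last item can be sketched roughly as follows. This is an illustration of the idea rather than the actual v2 code: it uses bit shifts instead of hex strings, and the base offset `BASE` (start of the CJK Unified Ideographs block) and the function names are assumptions made for the example.

```python
BASE = 0x4E00  # assumed offset; any block with 4096 usable code points would do


def pack_color(r, g, b, base=BASE):
    """Drop the low 4 bits of each 8-bit channel and pack 12 bits into one character."""
    value = ((r >> 4) << 8) | ((g >> 4) << 4) | (b >> 4)
    return chr(base + value)


def unpack_color(ch, base=BASE):
    """Recover an approximate (r, g, b) from a packed character."""
    value = ord(ch) - base
    r4, g4, b4 = (value >> 8) & 0xF, (value >> 4) & 0xF, value & 0xF
    # Multiplying by 17 expands 0x0..0xF to 0x00..0xFF (like #abc -> #aabbcc).
    return (r4 * 17, g4 * 17, b4 * 17)
```

For example, `unpack_color(pack_color(0x12, 0x34, 0x56))` gives `(17, 51, 85)`, that is `#113355`, so each channel is off by at most 15 levels.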

Characters: 133

Time: A few seconds

v2 decoded http://www.warriorhut.org/graphics/svg_to_unicode/so-logo-decoded-v2.png After encoding and decoding (version 2)

As you can see, there are some artifacts this time. It isn't a limitation of the method but a mistake somewhere in my conversions. The artifacts happen when the points go outside the range 0.0 - 127.0, and my attempts to constrain them have had mixed success. The solution is simply to scale the image down; however, I had trouble scaling the actual points rather than the artboard or group matrix, and I'm too tired now to care. In short, if your points are in the supported range it generally works.

I believe the kink in the middle is due to a handle moving to the other side of a handle it's linked to. Basically the points are too close together in the first place. Running a simplify filter over the source image in advance of compressing it should fix this and shave off some unnecessary characters.

UPDATE: This method is fine for simple objects so I needed a way to simplify complex paths and reduce noise. I used Inkscape for this task. I've had some luck with grooming out unnecessary paths using Inkscape but not had time to try automating it. I've made some sample svgs using the Inkscape 'Simplify' function to reduce the number of paths.

Simplify works ok but it can be slow with this many paths.

autotrace example http://www.warriorhut.org/graphics/svg_to_unicode/autotrace_16_color_manual_reduction.png

cornell box http://www.warriorhut.com/graphics/svg_to_unicode/cornell_box_simplified.png

lena http://www.warriorhut.com/graphics/svg_to_unicode/lena_std_washed_autotrace.png

thumbnails traced http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_autotrace.png

Here are some ultra low-res shots. These would be closer to the 140 character limit, though some clever path compression may be needed as well.

groomed http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_groomed.png Simplified and despeckled.

triangulated http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_triangulated.png Simplified, despeckled and triangulated.

autotrace --output-format svg --output-file cornell_box.svg --despeckle-level 20 --color-count 64 cornell_box.png

ABOVE: Simplified paths using autotrace.

Unfortunately my parser doesn't handle the autotrace output, so I don't know how many points are in use or how far to simplify; sadly, there's little time for writing it before the deadline. It's much easier to parse than the Inkscape output though.

Alright, here's mine: nanocrunch.cpp and the CMakeLists.txt file to build it using CMake. It relies on the Magick++ ImageMagick API for most of its image handling. It also requires the GMP library for bignum arithmetic for its string encoding.

I based my solution off of fractal image compression, with a few unique twists. The basic idea is to take the image, scale down a copy to 50% and look for pieces in various orientations that look similar to non-overlapping blocks in the original image. It takes a very brute force approach to this search, but that just makes it easier to introduce my modifications.

The first modification is that instead of just looking at ninety degree rotations and flips, my program also considers 45 degree orientations. It's one more bit per block, but it helps the image quality immensely.

The other thing is that storing a contrast/brightness adjustment for each color component of each block is way too expensive. Instead, I store a heavily quantized color (the palette has only 4 * 4 * 4 = 64 colors) that simply gets blended in at some proportion. Mathematically, this is equivalent to a variable brightness and constant contrast adjustment for each color. Unfortunately, it also means there's no negative contrast to flip the colors.
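To make the brightness/contrast equivalence concrete, here is a small sketch, not taken from nanocrunch.cpp. Blending a pixel p with a stored color c at proportion t gives (1 - t) * p + t * c: the contrast factor (1 - t) is the same for every block, while the brightness offset t * c varies with the block's color. The proportion `T = 0.25` and the function names are assumptions for illustration only.

```python
LEVELS = 4   # 4 levels per channel -> 4 * 4 * 4 = 64 palette colors
T = 0.25     # assumed fixed blend proportion; the real value is a tuning choice


def quantize(rgb):
    """Map an 8-bit (r, g, b) to a palette index triple, each in 0..LEVELS-1."""
    return tuple(min(LEVELS - 1, c * LEVELS // 256) for c in rgb)


def palette_color(index):
    """Map a palette index triple back to an 8-bit (r, g, b)."""
    return tuple(round(i * 255 / (LEVELS - 1)) for i in index)


def blend(pixel, color, t=T):
    """Blend a block color into a pixel: contrast (1 - t), brightness offset t * color."""
    return tuple((1 - t) * p + t * c for p, c in zip(pixel, color))
```

Two pixels that differ by d before blending differ by (1 - t) * d afterwards regardless of the color chosen, which is why only the 6-bit palette index needs to be stored per block.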

Once it's computed the position, orientation and color for each block, it encodes this into a UTF-8 string. First, it generates a very large bignum to represent the data in the block table and the image size. The approach to this is similar to Sam Hocevar's solution -- kind of a large number with a radix that varies by position.

Then it converts that number into a base equal to the size of the available character set. By default, it makes full use of the assigned Unicode character set, minus the less than, greater than, ampersand, control, combining, surrogate, and private use characters. It's not pretty but it works. You can also comment out the default table and select printable 7-bit ASCII (again excluding <, >, and & characters) or CJK Unified Ideographs instead. The table of which character codes are available is stored run-length encoded, with alternating runs of invalid and valid characters.
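The two steps described above (a variable-radix bignum, then a change of base to the character set size) can be sketched in Python, whose integers are arbitrary precision. This is a simplified illustration and not the code from nanocrunch.cpp: the function names are mine, and the alphabet in the usage note is just the CJK Unified Ideographs range rather than the run-length encoded table.

```python
def pack_mixed_radix(values, radices):
    """Combine fields into one integer; field i must satisfy 0 <= value < radices[i]."""
    number = 0
    for value, radix in zip(values, radices):
        if not 0 <= value < radix:
            raise ValueError("value out of range for its radix")
        number = number * radix + value
    return number


def unpack_mixed_radix(number, radices):
    """Inverse of pack_mixed_radix for the same list of radices."""
    values = []
    for radix in reversed(radices):
        number, value = divmod(number, radix)
        values.append(value)
    return values[::-1]


def to_text(number, alphabet):
    """Write a non-negative integer in base len(alphabet), one character per digit."""
    base = len(alphabet)
    digits = []
    while number:
        number, digit = divmod(number, base)
        digits.append(alphabet[digit])
    return "".join(reversed(digits)) or alphabet[0]


def from_text(text, alphabet):
    """Inverse of to_text for the same alphabet."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    number = 0
    for ch in text:
        number = number * len(alphabet) + index[ch]
    return number
```

With `alphabet = [chr(c) for c in range(0x4E00, 0x9FD0)]` (20,944 characters, about 14.35 bits each), a 140 character message holds roughly 2,009 bits. Because every field gets exactly the radix it needs, no bits are wasted rounding fields up to a power of two.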

Anyway, here are some images and times (as measured on my old 3.0GHz P4), compressed to 140 characters in the full assigned Unicode set described above. Overall, I'm fairly pleased with how they all turned out. If I had more time to work on this, I'd probably try to reduce the blockiness of the decompressed images. Still, I think the results are pretty good for the extreme compression ratio. The decompressed images are a bit impressionistic, but I find it relatively easy to see how bits correspond to the original.

Stack Overflow Logo (8.6s to encode, 7.9s to decode, 485 bytes):

http://i44.tinypic.com/2w7lok1.png

Lena (32.8s to encode, 13.0s to decode, 477 bytes):

http://i42.tinypic.com/2rr49wg.png http://i40.tinypic.com/2rhxxyu.png

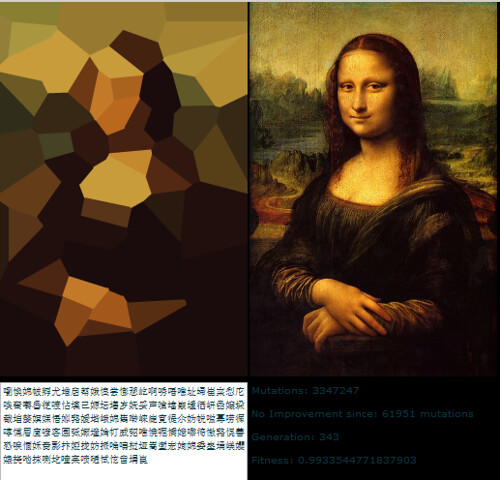

Mona Lisa (43.2s to encode, 14.5s to decode, 490 bytes):

http://i41.tinypic.com/ekgwp3.png http://i43.tinypic.com/ngsxep.png

Edit: CJK Unified Characters

Sam asked in the comments about using this with CJK. Here's a version of the Mona Lisa compressed to 139 characters from the CJK Unified character set:

http://i43.tinypic.com/2yxgdfk.png

咏璘驞凄脒鵚据蛥鸂拗朐朖辿韩瀦魷歪痫栘璯緍脲蕜抱揎頻蓼債鑡嗞靊寞柮嚛嚵籥聚隤慛絖銓馿渫櫰矍昀鰛掾撄粂敽牙稉擎蔍螎葙峬覧絀蹔抆惫冧笻哜搀澐芯譶辍澮垝黟偞媄童竽梀韠镰猳閺狌而羶喙伆杇婣唆鐤諽鷍鴞駫搶毤埙誖萜愿旖鞰萗勹鈱哳垬濅鬒秀瞛洆认気狋異闥籴珵仾氙熜謋繴茴晋髭杍嚖熥勳縿餅珝爸擸萿

The tuning parameters at the top of the program that I used for this were: 19, 19, 4, 4, 3, 10, 11, 1000, 1000. I also commented out the first definition of number_assigned and codes, and uncommented the last definitions of them to select the CJK Unified character set.