Is it possible to balance load between multiple Nginx load balancers without using a hardware load balancer?

I plan to balance load between my app servers using a separate Nginx server as a software load balancer. Actually, the main reason is that a load balancer in front of the app servers lets me update an app server (OS, application, etc.) whenever I want without downtime.

Then I realized: how do I update the load balancer itself? My site will still go down whenever I need to update the OS or Nginx on the server that acts as the load balancer for my application.

Since a hardware load balancer is out of the question, I was wondering whether it's possible to connect two Nginx servers so that together they balance load across the app servers, while letting me update one at a time, when needed, without downtime.

Is this realistic?

Solution 1:

You need a common access point, which is done with virtual IP addresses or routing. The best and easiest way to accomplish this without additional hardware or protocols is to use LVS (Linux Virtual Server).

LVS is a kernel-level IP load balancer that can do the job. It is fast and efficient, with impressive throughput. You can also configure a firewall on the same node.

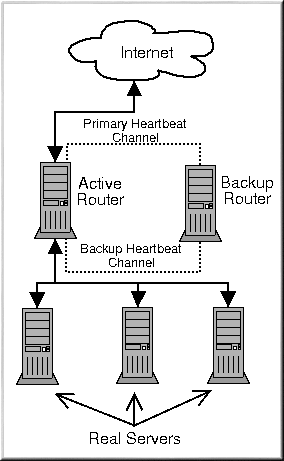

For your setup, the easiest way is to configure LVS-NAT with two nodes in active-backup mode. It supports a heartbeat between the nodes and uses virtual IPs on both sides (the client-facing side and the real-server side). If the master node fails, the backup node sends an ARP update to the switch and takes over the virtual IP addresses.
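To make the heartbeat and takeover behaviour concrete, here is a toy Python model of an active-backup pair. It is a simulation under stated assumptions, not how LVS or its tooling is implemented: the `Director` class, the 3-second `HEARTBEAT_TIMEOUT`, and the IP addresses are all hypothetical, and the ARP update is represented by a print statement.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical value; real heartbeat tooling makes this configurable.
HEARTBEAT_TIMEOUT = 3.0  # seconds of silence before the backup takes over


@dataclass
class Director:
    """Toy model of one director in an active-backup pair (illustrative only)."""
    name: str
    vips: tuple[str, ...]          # client-facing VIP and real-server-side VIP
    owns_vips: bool = False
    last_peer_heartbeat: float = 0.0
    announced: list[str] = field(default_factory=list)

    def receive_heartbeat(self, now: float) -> None:
        # Record when we last heard from the active peer.
        self.last_peer_heartbeat = now

    def check_peer(self, now: float) -> bool:
        """Take over the VIPs if the peer has been silent too long; True on takeover."""
        if self.owns_vips or now - self.last_peer_heartbeat <= HEARTBEAT_TIMEOUT:
            return False
        self.owns_vips = True
        for vip in self.vips:
            self.announce(vip)
        return True

    def announce(self, vip: str) -> None:
        # Stand-in for the ARP update telling the switch that this VIP
        # is now reachable via this node's MAC address.
        self.announced.append(vip)
        print(f"{self.name}: ARP update for {vip}")


VIPS = ("203.0.113.10", "10.0.0.1")  # hypothetical public VIP and internal gateway VIP
master = Director("lb1", VIPS, owns_vips=True)
backup = Director("lb2", VIPS)

# The master sends heartbeats at t=0, 1, 2 and then goes down for maintenance.
for t in (0.0, 1.0, 2.0):
    backup.receive_heartbeat(t)
    backup.check_peer(t)

print(backup.check_peer(4.0))  # 2.0 s of silence: still within the timeout
print(backup.check_peer(5.5))  # 3.5 s of silence: backup takes over both VIPs
```

The sketch leaves out what happens when the master comes back; a real setup also has to decide whether the recovered node reclaims the VIPs or stays passive.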

There are many configuration utilities, and some distributions ship convenient ones; for example, RHEL/CentOS/Fedora have the Piranha web GUI.

For the real servers, you can configure timeouts, scheduling algorithms, weights (ratios), health monitoring, session persistence, etc. It is quite flexible.
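As an illustration of how weights and session persistence fit together, here is a Python sketch of a weighted round-robin selection loop modelled on the pseudocode in the LVS job scheduling documentation, plus a simple source-IP persistence table. The persistence part is a simplification of LVS persistent services; the class names, the 300-second timeout, and the server names and client addresses are hypothetical.

```python
from __future__ import annotations

from functools import reduce
from math import gcd


class WeightedRoundRobin:
    """Weighted round-robin in the style of the LVS 'wrr' scheduler (sketch)."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.servers = list(weights)
        self.weights = [weights[s] for s in self.servers]
        self.max_w = max(self.weights)
        self.gcd_w = reduce(gcd, self.weights)
        self.i = -1   # index of the server chosen last time
        self.cw = 0   # current weight threshold

    def next(self) -> str | None:
        n = len(self.servers)
        while True:
            self.i = (self.i + 1) % n
            if self.i == 0:
                # Finished a pass: lower the threshold, wrapping back to the max weight.
                self.cw -= self.gcd_w
                if self.cw <= 0:
                    self.cw = self.max_w
                    if self.cw == 0:
                        return None  # all weights are zero: no usable server
            if self.weights[self.i] >= self.cw:
                return self.servers[self.i]


class PersistentScheduler:
    """Pins each client IP to one real server for `timeout` seconds (simplified)."""

    def __init__(self, scheduler: WeightedRoundRobin, timeout: float = 300.0) -> None:
        self.scheduler = scheduler
        self.timeout = timeout
        self.templates: dict[str, tuple[str, float]] = {}  # client IP -> (server, expiry)

    def pick(self, client_ip: str, now: float) -> str | None:
        entry = self.templates.get(client_ip)
        if entry is not None and entry[1] > now:
            server = entry[0]  # still persistent: reuse the same real server
        else:
            server = self.scheduler.next()
            if server is None:
                return None
        # Each new connection refreshes the expiry for this client.
        self.templates[client_ip] = (server, now + self.timeout)
        return server


wrr = WeightedRoundRobin({"app1": 4, "app2": 3, "app3": 2})
print([wrr.next() for _ in range(9)])
# ['app1', 'app1', 'app2', 'app1', 'app2', 'app3', 'app1', 'app2', 'app3']

sticky = PersistentScheduler(WeightedRoundRobin({"app1": 1, "app2": 1}))
print(sticky.pick("198.51.100.7", now=0.0))    # first visit: scheduled normally
print(sticky.pick("198.51.100.7", now=100.0))  # within the timeout: same server
```

Over each cycle of nine picks, the servers receive traffic in proportion to their weights (4:3:2), while the persistence layer overrides the scheduler for clients it has seen recently.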

It's also a good idea to share session information between the nodes, so that a failover doesn't break existing sessions.
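If "session info" is taken to mean the director's table of active connections (IPVS has a connection synchronization daemon, started with `ipvsadm --start-daemon`, for this purpose), the idea can be sketched in Python as below. This is a toy model with hypothetical names (`ConnectionTable`, `flush_to`) and made-up addresses; the real sync daemon sends entries to the peer over the network rather than calling a method on it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ConnectionTable:
    """Hypothetical director connection table: (client_ip, client_port) -> real server."""
    entries: dict[tuple[str, int], str] = field(default_factory=dict)
    outbox: list[tuple[tuple[str, int], str]] = field(default_factory=list)

    def add(self, conn: tuple[str, int], server: str) -> None:
        self.entries[conn] = server
        # Queue the new entry for the peer director (the sync step).
        self.outbox.append((conn, server))

    def flush_to(self, peer: ConnectionTable) -> int:
        """Push queued entries to the peer; returns how many were sent."""
        sent = len(self.outbox)
        for conn, server in self.outbox:
            peer.entries[conn] = server
        self.outbox.clear()
        return sent

    def route(self, conn: tuple[str, int]) -> str | None:
        # Packets of a known connection must keep going to the same real server.
        return self.entries.get(conn)


master = ConnectionTable()
backup = ConnectionTable()

master.add(("198.51.100.7", 51000), "app2")
master.flush_to(backup)

# After a failover, the backup still knows where this connection belongs.
print(backup.route(("198.51.100.7", 51000)))  # app2
print(backup.route(("198.51.100.8", 40000)))  # None: never synced, scheduled anew
```

Without the sync step, the backup would have an empty table after takeover and would schedule packets of in-flight connections as if they were new, which typically breaks those connections.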

Recommended docs:

LVS official: http://www.linuxvirtualserver.org/Documents.html

LVS wiki: http://kb.linuxvirtualserver.org/wiki/Main_Page