Can you actually use Terminal to crash your computer?

Solution 1:

One way to crash a computer is to run a so-called fork bomb.

You can run it on a Unix system with:

:(){ :|: & };:

It's a command that recursively spawns processes until the OS is so busy that it no longer responds to anything.
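For the curious: the one-liner defines a function named `:` whose body, `:|: &`, calls itself twice (one call piped into the other) in the background, and the final `:` starts it, so every call spawns two more. The sketch below reproduces only that doubling and is safe to run: `grow` is a hypothetical name, it uses ordinary sequential function calls instead of background processes, and it stops at a fixed depth.

```shell
# Harmless, depth-limited illustration of the fork bomb's growth pattern.
# Each call invokes itself twice (the bomb does this with ":|:"),
# but these are plain function calls (no "&") and recursion stops at depth 0.
grow() {
  if [ "$1" -eq 0 ]; then
    echo leaf              # one output line per call at the bottom level
    return
  fi
  grow $(($1 - 1))
  grow $(($1 - 1))
}

# Count the bottom-level calls; tr strips the padding some wc versions add.
leaves=$(grow 10 | wc -l | tr -d ' ')
echo "leaf calls at depth 10: $leaves"   # 2^10 = 1024
```

Each extra level doubles the count, which is why the real, unbounded version exhausts the system's process limits so quickly.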

Solution 2:

Not sure what you mean by 'crashing' the computer. If you rephrase it as 'render the computer unusable', then yes. All it takes is a single stray command, typed in a moment when you're not thinking clearly about what you're doing (much like speaking without thinking), and the damage can be immense and almost immediate. The classic example:

sudo rm -rf /

Let that command run for even one second and it can wipe out enough of your system to make it unbootable, possibly with irreversible data loss. Don't do it.

Solution 3:

Suppose you don't really know what you're doing and are trying to back up a hard drive:

dd if=/dev/disk1 of=/dev/disk2

If you mix those up (swap if and of), dd will overwrite your fresh data with the old data, no questions asked.
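You can see the effect safely with two ordinary files standing in for the disks. This is a minimal sketch: the file names are made up, and everything happens in a throwaway directory created with mktemp, never on real devices.

```shell
# Two ordinary files stand in for the disks; "if=" is the source, "of=" the target.
work=$(mktemp -d)
echo "fresh data" > "$work/disk1.img"   # the drive you meant to back up
echo "old backup" > "$work/disk2.img"   # the backup drive

# Intended:  dd if="$work/disk1.img" of="$work/disk2.img"
# Swapped:   the old backup is copied over the fresh data
dd if="$work/disk2.img" of="$work/disk1.img" 2>/dev/null

cat "$work/disk1.img"    # prints: old backup
```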

Similar mix-ups can happen with archive utilities and, frankly, with most command-line utilities.

For an example of a one-character mistake that will crash your system, consider this scenario: you want to move all the files in the current directory to another one:

mv -f ./* /path/to/other/dir

Let's say you've learned to write ./ for the current directory (I do).

If you omit the dot, /* matches everything in the root directory, so mv starts moving all your files, including your system files. You're lucky you didn't use sudo. But if you read somewhere that after sudo -i you'll never have to type sudo again, you're now logged in as root, and your system is eating itself in front of your very eyes.
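One habit that catches this kind of slip is to put echo in front of the command first, so the shell shows you what the glob expands to without running anything. A sketch in a throwaway directory (the file names are hypothetical):

```shell
work=$(mktemp -d)
cd "$work" || exit 1
touch notes.txt report.txt

# What you meant: "./*" expands to the entries of the current directory.
echo mv -f ./* /path/to/other/dir   # prints: mv -f ./notes.txt ./report.txt /path/to/other/dir

# The typo: "/*" expands to every top-level entry of /, such as /bin, /etc and /usr.
echo mv -f /* /path/to/other/dir
```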

Still, I think overwriting my precious code files with garbage, because I got one character wrong or mixed up the order of parameters, is the bigger problem.

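Let's say I want to check out the assembly code that gcc generates. (gcc -S already writes program.s on its own, but redirecting output into a file is a habit many of us have.)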

gcc -S program.c > program.s

Suppose I already have a program.s and I use TAB completion. I'm in a hurry and forget to press TAB twice:

gcc -S program.c > program.c

Now program.c is empty: the shell truncates the file after > before gcc even starts, so gcc compiles an empty file and my C code is gone. For some that's merely a setback; for others it's start-over-from-scratch time.
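Shells can guard against this particular slip: with the noclobber option (set -C in POSIX terms), > refuses to overwrite an existing file, and you have to write >| when you really mean it. A sketch in a throwaway directory, with made-up file contents:

```shell
work=$(mktemp -d)
printf 'int main(void) { return 0; }\n' > "$work/program.c"
echo "old listing" > "$work/program.s"

set -C                      # same as: set -o noclobber

# The mistyped redirect now fails and program.c is left untouched.
if ! { echo "assembly" > "$work/program.c"; } 2>/dev/null; then
  echo "refused to overwrite program.c"
fi

# ">|" overrides noclobber when you really do mean to overwrite.
echo "new listing" >| "$work/program.s"

set +C                      # back to the default behaviour
```

The trade-off is that re-running gcc -S program.c > program.s also fails once program.s exists, until you switch to >| or delete the file first.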

I think these are the mistakes that cause real harm. I don't much care if my system crashes; I do care about losing my data.

Unfortunately, these are mistakes most people make before they learn to use the terminal with the proper precautions.

Solution 4:

Causing a kernel panic is more akin to crashing than the other answers I've seen here so far:

sudo dtrace -w -n "BEGIN{ panic();}"

(code taken from here and also found in Apple's own documentation)

You might also try:

sudo killall kernel_task

I haven't verified that the second one actually works (and I don't intend to, as I have some work open right now).

Solution 5:

Modern macOS makes it really hard to crash your machine as an unprivileged user (i.e. without sudo), because UNIX systems are designed to serve thousands of users without letting any one of them break the whole system. So, thankfully, you'll usually be prompted for a password before you can do something that destroys your machine.

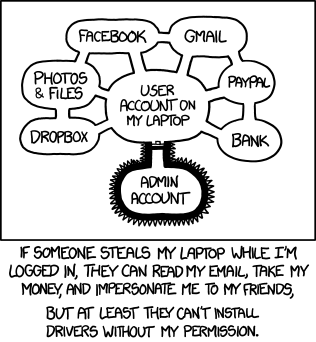

Unfortunately, that protection only applies to the system itself. As xkcd illustrates, a lot of what you care about isn't protected by System Integrity Protection, root privileges or password prompts.

So there's plenty you can type that will wreck your user account and all your files if you aren't careful. A few examples:

- `rm -rf ${TEMPDIR}/*`. This seems totally reasonable until you realize that the environment variable is spelled `TMPDIR`. `TEMPDIR` is usually undefined, so this expands to `rm -rf /*`. Even without `sudo`, it will happily remove anything you have permission to delete, which usually includes your entire home folder. If you let it run long enough, it will also wipe any drive connected to your machine, since you usually have write permission on those too.
- `find ~ -name "TEMP*" -o -print | xargs rm`. `find` normally locates files matching certain criteria and prints them. Without the `-o`, this does what you'd expect and deletes every file whose name starts with `TEMP` (as long as there are no spaces in the paths). But `-o` means "or" (not "output", as it does for many other commands!), so `-print` runs only for files that do not match, and the command deletes every file in your home folder except the `TEMP*` ones. Bummer.
- `ln -sf link_name /some/important/file`. I occasionally get the argument order for this command wrong (it's `ln -s target link_name`), and with `-f` it will rather happily replace your important file with a useless symbolic link.
- `kill -9 -1` will kill every one of your programs, logging you out rather quickly and possibly causing data loss.
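The first three pitfalls can be reproduced harmlessly, with printf in place of rm and a throwaway directory in place of your home folder. This is a sketch; the file names (`TEMP1`, `thesis.tex`, `precious.txt` and so on) are made up for illustration.

```shell
# 1. A misspelled, unset variable expands to nothing.
unset TEMPDIR
printf '%s\n' "rm -rf ${TEMPDIR}/*"     # prints: rm -rf /*

# ${VAR:?} makes the shell refuse instead of expanding to "", so writing
# rm -rf "${TEMPDIR:?}"/* would have stopped the original command.
# The check runs in a subshell here so the error doesn't end the demo.
if ! ( : "${TEMPDIR:?}" ) 2>/dev/null; then
  echo "refused: TEMPDIR is not set"
fi

# 2. "-o" means "or": -print runs only for names that do NOT match TEMP*.
work=$(mktemp -d)
touch "$work/TEMP1" "$work/thesis.tex" "$work/notes.txt"
echo "without -o (what you meant to delete):"
find "$work" -name "TEMP*"
echo "with -o -print (what xargs rm would actually get):"
find "$work" -name "TEMP*" -o -print

# 3. ln takes the target first: ln -s TARGET LINK_NAME.
echo "important" > "$work/important.txt"
ln -s "$work/important.txt" "$work/shortcut"   # correct order
echo "precious" > "$work/precious.txt"
ln -sf link_name "$work/precious.txt"          # swapped: precious.txt is now a symlink
ls -l "$work/precious.txt"                     # shows precious.txt -> link_name
```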