How to install pytorch FROM SOURCE (with cuda enabled for a deprecated CUDA cc 3.5 of an old gpu) using anaconda prompt on Windows 10?

I have (with the help of the deviceQuery executable in C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\vX.Y\extras\demo_suite, according to https://forums.developer.nvidia.com/t/what-is-the-compute-capability-of-a-geforce-gt-710/146956/4):

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GT 710"
CUDA Driver Version / Runtime Version 11.0 / 11.0
CUDA Capability Major/Minor version number: 3.5
Total amount of global memory: 2048 MBytes (2147483648 bytes)
( 1) Multiprocessors, (192) CUDA Cores/MP: 192 CUDA Cores
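If you want to read the compute capability out of the deviceQuery text programmatically, a minimal sketch could look like the following. The function name `parse_compute_capability` and the `SAMPLE` string are made up for illustration; only the label text comes from the output quoted above.

```python
import re

# Sample text copied from the deviceQuery output quoted above.
SAMPLE = (
    'Device 0: "GeForce GT 710"\n'
    "CUDA Capability Major/Minor version number: 3.5\n"
)


def parse_compute_capability(device_query_text):
    """Return (major, minor) from deviceQuery text, or None if not found."""
    match = re.search(
        r"CUDA Capability Major/Minor version number:\s*(\d+)\.(\d+)",
        device_query_text,
    )
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


print(parse_compute_capability(SAMPLE))  # (3, 5)
```

The resulting pair is what you would look up in the compatibility table to pick a CUDA toolkit version.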

As this is an old and underpowered graphics card, I need to install pytorch from source by compiling it on my computer with various required settings and conditions, a not very intuitive process which took me days. At least my card supports CUDA cc 3.5 and thus it supports all of the latest CUDA and cuDNN versions, as cc 3.5 is just deprecated, nothing worse.

I followed the steps from README for building pytorch from source at https://github.com/pytorch/pytorch#from-source which also links to the right compiler at https://gist.github.com/ax3l/9489132.

I have succeeded in building PyTorch from source on Windows 10 (as described in the pytorch repo readme.md: https://github.com/pytorch/pytorch#from-source), but I’m getting an error when running import torch:

ImportError: DLL load failed: A dynamic link library (DLL) initialization routine failed. Error loading "C:\Users\Admin\anaconda3\envs\ml\lib\site-packages\torch\lib\caffe2_detectron_ops_gpu.dll" or one of its dependencies.

I cannot use the pytorch that was built successfully from source: (DLL) initialization routine failed. Error loading caffe2_detectron_ops_gpu.dll
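To narrow down which library in torch\lib actually fails to load, one rough diagnostic is to try loading every DLL in that folder and collect the errors. This is only a sketch: the helper name `find_unloadable_dlls` is hypothetical, loading a library runs its initialization code, and a DLL may be reported only because one of its own dependencies (for example a CUDA DLL that is not on the DLL search path) is missing.

```python
import ctypes
from pathlib import Path


def find_unloadable_dlls(lib_dir):
    """Try to load each *.dll in lib_dir; return {file name: error message}."""
    failures = {}
    for dll in sorted(Path(lib_dir).glob("*.dll")):
        try:
            ctypes.CDLL(str(dll))
        except OSError as exc:
            # The message usually names the failing library or a dependency.
            failures[dll.name] = str(exc)
    return failures


# Example call (path taken from the error message above):
# find_unloadable_dlls(r"C:\Users\Admin\anaconda3\envs\ml\lib\site-packages\torch\lib")
```

An empty result means every DLL could be loaded on its own, which would point to a load-order or initialization problem rather than a missing file.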

Solution 1:

EDIT: Before you try the long guide and install everything again, you might solve the error "(DLL) initialization routine failed. Error loading caffe2_detectron_ops_gpu.dll" by downgrading from torch=1.7.1 to torch=1.6.0, according to this (I have not tested it).

This is a selection of guides that I used.

- https://github.com/pytorch/pytorch#from-source

- https://discuss.pytorch.org/t/pytorch-build-from-source-on-windows/40288

- https://www.youtube.com/watch?v=sGWLjbn5cgs

- https://github.com/pytorch/pytorch/issues/30910

- https://github.com/exercism/cpp/issues/250

The solution here was drawn from many more steps, see this in combination with this. An overall start for cuda questions is on this related Super User question as well.

Here is the solution:

- Install cmake: https://cmake.org/download/

Add to PATH environmental variable:

C:\Program Files\CMake\bin

- Install git, which includes mingw64 which also delivers curl: https://git-scm.com/download/win

Add to PATH environmental variable:

C:\Program Files\Git\cmd

C:\Program Files\Git\mingw64\bin for curl
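After editing PATH, you can check from a fresh prompt whether the tools are actually found. A minimal sketch, assuming the executables are named cmake, git and curl (the helper `missing_tools` is made up for this example):

```python
import shutil


def missing_tools(names):
    """Return the tool names that cannot be found on the current PATH."""
    return [name for name in names if shutil.which(name) is None]


# An empty list means all three tools were found.
print(missing_tools(["cmake", "git", "curl"]))
```

Remember that a prompt opened before the PATH change will not see the new entries.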

- As the compiler, I chose

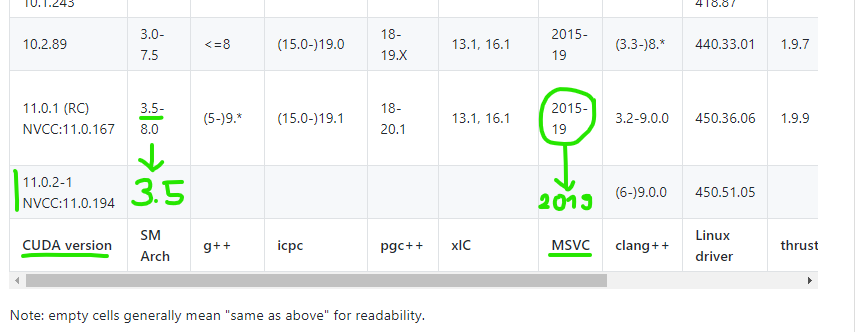

MSVC 2019 for the CUDA compiler driver NVCC:10.0.194, since that can handle CUDA cc 3.5 according to https://gist.github.com/ax3l/9489132. Of course, you will want to check your own current driver version.

Note that the green arrows only indicate that the cell above is copied into the empty cell below; this is by design of the table and has no further meaning. The green marks and notes just highlight the version numbers relevant in my case (3.5 and 2019). Which versions are relevant for you depends entirely on your own setup.

Running the MS Visual Studio 2019 16.7.1 installer and choosing --> Individual components lets you install:

- most recent MSVC v142 - VS 2019 C++-x64/x86-Buildtools (v14.27) (the most recent x64 version at that time)

- most recent Windows 10 SDK (10.0.19041.0) (the most recent x64 version at that time).

- As my graphics card's CUDA Capability Major/Minor version number is 3.5, I can install the latest possible cuda 11.0.2-1 available at this time. In your case, always look up a current version of the previous table again and find out the best possible cuda version for your CUDA cc. The cuda toolkit is available at https://developer.nvidia.com/cuda-downloads.

- Change the PATH environmental variable:

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\bin;%PATH%

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\extras\CUPTI\lib64;%PATH%

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\include;%PATH%

- Download cuDNN from https://developer.nvidia.com/cudnn-download-survey. You have to register to do this. Then install cuDNN by copying the latest cuDNN zip-extract to the following directory:

C:\Program Files\NVIDIA cuDNN

- Change the PATH environmental variable:

SET PATH=C:\Program Files\NVIDIA cuDNN\cuda;%PATH%
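The SET commands above simply put directories in front of the existing PATH. As an illustration of that logic, here is a small sketch that builds such a value while skipping directories that are already present. The function `prepend_dirs` is a made-up helper, not part of any tool mentioned here.

```python
def prepend_dirs(path_value, new_dirs, sep=";"):
    """Prepend new_dirs to a PATH-style string, skipping duplicates."""
    existing = [p for p in path_value.split(sep) if p]
    # Compare case-insensitively and ignore a trailing backslash.
    seen = {p.lower().rstrip("\\") for p in existing}
    added = []
    for d in new_dirs:
        key = d.lower().rstrip("\\")
        if key not in seen:
            added.append(d)
            seen.add(key)
    return sep.join(added + existing)


cuda_dirs = [
    r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\bin",
    r"C:\Program Files\NVIDIA cuDNN\cuda",
]
print(prepend_dirs(r"C:\Windows\system32", cuda_dirs))
```

Skipping duplicates keeps PATH from growing each time the commands are repeated in the same prompt.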

- Open the Anaconda prompt and, ideally, create a new virtual environment for pytorch with a name of your choice, according to https://stackoverflow.com/questions/48174935/conda-creating-a-virtual-environment:

conda create -n myenv

- Install the packages that may be needed:

(myenv) C:\Users\Admin>conda install numpy ninja pyyaml mkl mkl-include setuptools cmake cffi typing_extensions future six requests
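To see which of these packages are importable in the active environment, a quick check like the following may help. It is a sketch only: `missing_modules` is a hypothetical helper, and the import names are my assumption of what the conda packages provide (for example, pyyaml is imported as yaml); command-line tools such as ninja and cmake are not covered by it.

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level module names that cannot be found for import."""
    return [
        name
        for name in module_names
        if importlib.util.find_spec(name) is None
    ]


print(
    missing_modules(
        ["numpy", "yaml", "setuptools", "cffi", "typing_extensions", "six", "requests"]
    )
)
```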

- In anaconda or cmd prompt, clone pytorch into a directory of your choice. I am using my Downloads directory here:

C:\Users\Admin\Downloads\Pytorch>git clone https://github.com/pytorch/pytorch

- In anaconda or cmd prompt, recursively update the cloned directory:

C:\Users\Admin\Downloads\Pytorch\pytorch>git submodule update --init --recursive

- Since there is poor support for MSVC OpenMP in detectron, we need to build pytorch from source with MKL so that Intel OpenMP will be used, according to this developer's comment and referring to https://pytorch.org/docs/stable/notes/windows.html#include-optional-components. So how to do this?

Install 7z from https://www.7-zip.de/download.html.

Add to PATH environmental variable:

C:\Program Files\7-Zip\

Now download the MKL archive (please check the most recent version in the link again):

curl https://s3.amazonaws.com/ossci-windows/mkl_2020.0.166.7z -k -O

7z x -aoa mkl_2020.0.166.7z -omkl

My chosen destination directory was C:\Users\Admin\mkl.

Also needed according to the link:

conda install -c defaults intel-openmp -f
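The set commands used later in this guide point at mkl\include and mkl\lib, so it is worth confirming that the extracted folder really contains them before starting the long build. A minimal sketch (the helper name `missing_mkl_dirs` is made up, and the two subfolder names are taken from those later commands):

```python
from pathlib import Path


def missing_mkl_dirs(mkl_root):
    """Return which of the expected subfolders are absent under mkl_root."""
    root = Path(mkl_root)
    return [name for name in ("include", "lib") if not (root / name).is_dir()]


# Replace the path with wherever you extracted the archive.
print(missing_mkl_dirs(r"C:\Users\Admin\mkl"))
```

An empty list means both folders exist; anything else suggests the archive was extracted to a different place than the one you are pointing at.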

- Open the Anaconda prompt and activate your virtual environment, whatever you named it:

activate myenv

- Change to your chosen pytorch source code directory.

(myenv) C:\WINDOWS\system32>cd C:\Users\Admin\Downloads\Pytorch\pytorch

- Now before starting cmake, we need to set a lot of variables.

As we use mkl as well, we need it as follows:

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set "CMAKE_INCLUDE_PATH=C:\Users\Admin\Downloads\Pytorch\mkl\include"

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set "LIB=C:\Users\Admin\Downloads\Pytorch\mkl\lib;%LIB%"

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set USE_NINJA=OFF

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set CMAKE_GENERATOR=Visual Studio 16 2019

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set USE_MKLDNN=ON

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>set "CUDAHOSTCXX=C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\VC\Tools\MSVC\14.27.29110\bin\Hostx64\x64\cl.exe"

(myenv) C:\Users\Admin\Downloads\Pytorch\pytorch>python setup.py install --cmake
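If you prefer to keep these settings in a script instead of retyping them, the same variables can be collected in Python and passed to the build command. This is only a sketch of the steps above, not an official build script: `build_env` and `run_build` are hypothetical helpers, and the variable names and values are exactly the ones set in the commands above.

```python
import ntpath  # Windows path rules, available on every platform
import os
import subprocess
import sys


def build_env(mkl_root, cl_path, base_env=None):
    """Return a copy of the environment with the build variables added."""
    env = dict(os.environ if base_env is None else base_env)
    env["CMAKE_INCLUDE_PATH"] = ntpath.join(mkl_root, "include")
    mkl_lib = ntpath.join(mkl_root, "lib")
    # Prepend the MKL lib folder, keeping any existing LIB entries.
    env["LIB"] = mkl_lib + ";" + env["LIB"] if env.get("LIB") else mkl_lib
    env["USE_NINJA"] = "OFF"
    env["CMAKE_GENERATOR"] = "Visual Studio 16 2019"
    env["USE_MKLDNN"] = "ON"
    env["CUDAHOSTCXX"] = cl_path
    return env


def run_build(source_dir, env):
    """Run 'python setup.py install --cmake' in source_dir (takes hours)."""
    subprocess.run(
        [sys.executable, "setup.py", "install", "--cmake"],
        cwd=source_dir,
        env=env,
        check=True,
    )
```

You would call `run_build(r"C:\Users\Admin\Downloads\Pytorch\pytorch", build_env(...))` from the activated environment; nothing is started until you do so.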

Note: Let this run overnight; the build above took 9.5 hours and blocks the computer.

Important: Ninja can parallelize CUDA build tasks.

Ninja may speed up the build, according to https://pytorch.org/docs/stable/notes/windows.html#include-optional-components. In my case, the install did not succeed using ninja. You may still try it: set CMAKE_GENERATOR=Ninja (after having installed it first with pip install ninja). You might also need set USE_NINJA=ON, or, even better, leave out set USE_NINJA completely and use just set CMAKE_GENERATOR=Ninja (see Switch CMake Generator to Ninja); perhaps this will work for you. It is definitely possible to use ninja, see this comment of a successful ninja-based installation.

[I might also be wrong in expecting ninja to work by a pip install in my case. Perhaps we also need to get the source code of ninja instead, perhaps also using curl, as was done for MKL. Please comment or edit if you know more about it, thank you.]
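The choice between the two generators can be written down as a small decision: if a ninja executable is found, use only CMAKE_GENERATOR=Ninja and leave USE_NINJA unset; otherwise fall back to the Visual Studio generator that worked for me. A sketch with a made-up helper name, `generator_settings`; whether the ninja route succeeds on your machine is untested here.

```python
import shutil


def generator_settings(ninja_path):
    """Return the generator-related variables for the build environment."""
    if ninja_path:
        # Ninja variant: USE_NINJA is deliberately left unset.
        return {"CMAKE_GENERATOR": "Ninja"}
    # Fallback: the combination that ran through in this guide.
    return {"CMAKE_GENERATOR": "Visual Studio 16 2019", "USE_NINJA": "OFF"}


print(generator_settings(shutil.which("ninja")))
```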

- In my case, the build ran through using mkl and without using ninja.

- Now a side remark. If you are using spyder, mine at least was corrupted by the cuda install:

(myenv) C:\WINDOWS\system32>spyder
cffi_ext.c
C:\Users\Admin\anaconda3\lib\site-packages\zmq\backend\cffi\__pycache__\_cffi_ext.c(268): fatal error C1083: Cannot open include file: "zmq.h": No such file or directory
Traceback (most recent call last):
File "C:\Users\Admin\anaconda3\Scripts\spyder-script.py", line 6, in <module>
from spyder.app.start import main
File "C:\Users\Admin\anaconda3\lib\site-packages\spyder\app\start.py", line 22, in <module>
import zmq
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\__init__.py", line 50, in <module>
from zmq import backend
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\backend\__init__.py", line 40, in <module>
reraise(*exc_info)
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\utils\sixcerpt.py", line 34, in reraise
raise value
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\backend\__init__.py", line 27, in <module>
ns = select_backend(first)
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\backend\select.py", line 28, in select_backend
mod = __import__(name, fromlist=public_api)
File "C:\Users\Admin\anaconda3\lib\site-packages\zmq\backend\cython\__init__.py", line 6, in <module>
from . import (constants, error, message, context,
ImportError: DLL load failed while importing error: The specified module could not be found.

Installing spyder over the existing installation again:

(myenv) C:\WINDOWS\system32>conda install spyder

Opening spyder:

(myenv) C:\WINDOWS\system32>spyder

- Test your pytorch install.

I did it according to this:

import torch

torch.__version__

Out[3]: '1.8.0a0+2ab74a4'

torch.cuda.current_device()

Out[4]: 0

torch.cuda.device(0)

Out[5]: <torch.cuda.device at 0x24e6b98a400>

torch.cuda.device_count()

Out[6]: 1

torch.cuda.get_device_name(0)

Out[7]: 'GeForce GT 710'

torch.cuda.is_available()

Out[8]: True
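The interactive checks above can also be bundled into one script that additionally runs a tiny computation on the GPU, which is a stronger test than is_available() alone. A minimal sketch; the function name `cuda_report` is made up, and the script falls back gracefully if torch cannot be imported (which is also what happens with the DLL error from the question).

```python
def cuda_report():
    """Collect basic facts about the torch install and its CUDA support."""
    try:
        import torch
    except (ImportError, OSError):
        # Covers a missing package as well as DLL load failures.
        return {"torch_installed": False}
    report = {
        "torch_installed": True,
        "version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
        report["capability"] = torch.cuda.get_device_capability(0)
        # Run a small operation on the GPU to confirm kernels execute.
        x = torch.ones(3, device="cuda")
        report["sum_on_gpu"] = float((x + x).sum().item())
    return report


print(cuda_report())
```

On a working build for this card, the capability entry should match the 3.5 reported by deviceQuery.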