NVMe ssd: Why is 4k writing faster than reading?

4k reads are going to be about the hardest thing the drive can do. They are amongst the smallest block sizes the drive is going to be able to handle, and there's no way for the drive to preload large quantities of data, in fact they are probably quite inefficient if the drive load-ahead logic is intending to read anything larger than 4kb.

"Normal" drive reads are more likely to be larger than 4kb as there are very few files that are that small, and even the page file is likely to be read in large chunks as it would be odd for a program to have "only" 4KB of memory paged out. This means that any preloading that the drive tries to do will actually penalise the drive throughput.

4K reads might pass through the drive buffer, but the "random" part of the test makes them entirely unpredictable. The controller won't know when the drive might need the more usual "large" reads again.

4K writes on the other hand can be buffered, queued, and written out sequentially in an efficient manner. The drive buffer can do a lot of the catch-and-write work that it was designed for, and the wear leveller might even allocate all those 4K writes to the same drive erase block, occasionally turning what is a 4K "random" write into something closer to a sequential write.

In fact I suspect that this is what is happening in the "4K-64Thrd" writes, the "64-Thrd" is apparently using a large queue depth, thus signalling to the drive that it has a large amount of data to read or write. This triggers a lot of clustering of writes and so approaches the sequential write speed of the drive. There is still an overhead to performing a 4K write, but now you are fully exposing the potential of the buffer. In the Read version of the test the drive controller, now recognising that it is under very constant heavy load, stops preloading data, possibly avoids the buffer and instead switches to a "raw" read mode, again approaching the sequential read speed.

Basically the drive controller can do something to make a 4K write more efficient, especially if a cluster of them arrive at a similar time, while it can't do anything to make a single 4K read more efficient, especially if it is trying to optimise dataflow by pre-loading data into the cache.

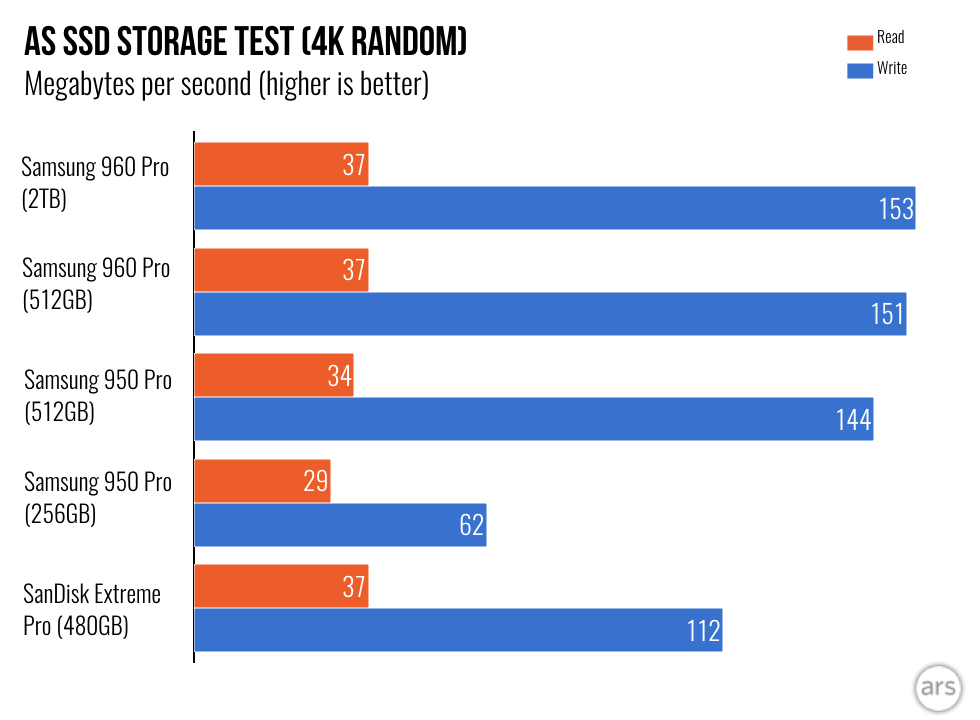

Other answers have already explained why it may be that writing is faster than reading; I would like to add that for this drive this is absolutely normal, as it is confirmed by benchmarks that you can find in reviews.

ArsTecnica's review

ArsTechnica has reviewed the drive, both your version (512 GB) and the 2 TB one:

(This graph is not immediately visible in the review, it's the 5th one in the first gallery, you have to click on it)

(This graph is not immediately visible in the review, it's the 5th one in the first gallery, you have to click on it)

The performance of these 2 models is very similar, and their numbers look like yours: the drive can read at 37 MB/s and write at 151 MB/s.

AnandTech's review

AnandTech has also reviewed the drive: they used the 2TB model, averaging the results of tests with a queue depth of 1, 2 and 4. These are the graphs:

The drive reads at 137 MB/s and writes at 437 MB/s. The number are much higher than yours, but it's probably due to the higher queue depths. Anyway the write speed is 3 times the read speed, as in your case.

PC World's review

One more review, by PC World: they have tested the 1 TB version, and the results for 4K are 30 MB/s for reading and 155 MB/s for writing:

The write speed is in line with yours, but here the drive is even slower at reading. The result is that the ratio is five to one, not three to one.

The write speed is in line with yours, but here the drive is even slower at reading. The result is that the ratio is five to one, not three to one.

Conclusion

Reviews confirm that for this drive it is normal that the write speed for random 4K is much faster than the read speed: depending on the test, it can even be 5 times faster.

Your drive is fine. There's no reason to believe it is faulty, or that your system has a problem.

SSD controller caches writes in the onboard NVRAM, and flushes it to flash media at opportune times. Write latency is thus the cache access latency, typically 20us. Reads, on the contrary, are served off the media, with access time of 120-150us at best.

Expanding on Andrey's answer, you need to look at the overhead involved before the SSD can signal to the computer that the operation is complete.

For a write, the data must merely be written to an internal RAM cache. Later it will be written to flash memory, along with other 4k blocks and metadata needed to check, error correct and locate it.

For a read, the SSD must first locate the data. The location that the computer wants to read is called the logical address, and does not have a direct relationship with the physical location of the data in flash memory. The SSD translates the logical address into a physical one, based on the geometry of the flash memory (the way the cells are arranged), bad block remapping, wear levelling and various other factors. It then has to wait for any other operations to finish before retrieving the data from flash, then checking it and if required re-reading and applying error correction, possibly even re-writing the whole block somewhere else.

While the total time taken by a write operation may be longer than a typical read operation, the time taken for the SSD to report that the operation completed to the extent that it can process further commands is lower. With large blocks the overhead is not the limiting factor, but with many small blocks it starts to limit read/write speed.